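Breeze is a graphical Kubernetes deployment tool open-sourced by Wise2C (Shenzhen). It greatly simplifies the steps needed to deploy Kubernetes. Its main highlight is support for fully offline deployment, with no need to work around network restrictions to fetch Google-hosted packages, which makes it especially suitable for servers that cannot easily reach the Internet. (Project: https://github.com/wise2c-devops/breeze )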

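The Breeze open-source tool consists of the following subprojects:

- playbook (breeze): a set of Ansible playbooks for docker, etcd, registry, and kubernetes

- yum-repo: provides an offline yum repository during installation. It contains the rpm packages for docker, kubelet, kubectl, kubeadm, kubernetes-cni, and docker-compose, plus ceph- and nfs-related rpms that may be needed

- deploy-ui: the front-end UI, built with the vue.js framework

- pagoda: the set of APIs that invoke the Ansible scripts

- kubeadm-version: outputs the image version information for the Kubernetes components

- haproxy: image and startup script for installing the load balancer

- keepalived: image and startup script for the component that gives the load balancer a single virtual IP entry point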

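Breeze architecture overview:

With Breeze, you only need one server with Docker and the docker-compose command installed. Connect it to the Internet, download the docker-compose.yml file for the Kubernetes version you want, and you can bring up the deployment program. The deploy host only needs ordinary Internet access and no workaround for blocked sites, because all docker images and rpm packages that Kubernetes requires are already built into the docker images.

Offline installation is also easy: save the docker images referenced in docker-compose.yml, load them in the offline environment with docker load, then run docker-compose up -d to start the deployment program without any network. The servers that take on cluster roles never need Internet access.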
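As a rough sketch of the offline workflow just described, the helper below lists the images named in a compose file so they can be passed to docker save. The function name `compose_images` and the archive name `breeze-images.tar` are hypothetical, and the sketch assumes every image appears on its own `image: name[:tag]` line.

```shell
#!/usr/bin/env bash
# Sketch: collect the image references from a docker-compose file.
# Assumption: each image is declared on its own "image: name[:tag]" line.

# Print the unique image references found in the given compose file.
compose_images() {
  awk '$1 == "image:" { gsub(/"/, "", $2); print $2 }' "$1" | sort -u
}

# On a machine with Internet access (archive name is hypothetical):
#   docker-compose -f docker-compose.yml pull
#   docker save -o breeze-images.tar $(compose_images docker-compose.yml)
# On the offline deploy host:
#   docker load -i breeze-images.tar
#   docker-compose up -d
```

The docker commands are left as comments so the listing step can be checked on its own before anything is pulled or saved.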

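The project is open source, so you can easily fork it to your own git account and use Travis to automatically build an installer for any Kubernetes version.

Our lab environment has six servers with the following configuration and roles (prepare additional Minion/Worker nodes yourself if you need them):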

| Hostname | IP address | Role | OS | Components |
| --- | --- | --- | --- | --- |
| deploy | 192.168.9.10 | Breeze Deploy | CentOS 7.5 x64 | docker / docker-compose / Breeze |
| k8s01 | 192.168.9.11 | K8S Master | CentOS 7.5 x64 | K8S Master / etcd / HAProxy / Keepalived |
| k8s02 | 192.168.9.12 | K8S Master | CentOS 7.5 x64 | K8S Master / etcd / HAProxy / Keepalived |
| k8s03 | 192.168.9.13 | K8S Master | CentOS 7.5 x64 | K8S Master / etcd / HAProxy / Keepalived |
| k8s04 | 192.168.9.14 | K8S Minion Node | CentOS 7.5 x64 | K8S Worker |
| registry | 192.168.9.20 | Harbor | CentOS 7.5 x64 | Harbor 1.6.0 |
| | 192.168.9.30 | VIP | | HA virtual IP that floats across the 3 K8S Masters |

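Steps:

I. Prepare the deploy host (deploy / 192.168.9.10)

(1) After installing CentOS 7.5 (1804) x64 with the standard Minimal profile, log in to a shell and run the following commands to disable SELinux and open the firewall: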

setenforce 0

sed --follow-symlinks -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

firewall-cmd --set-default-zone=trusted

firewall-cmd --complete-reload

(2) Install the docker-compose command

curl -L https://github.com/docker/compose/releases/download/1.21.2/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose

chmod +x /usr/local/bin/docker-compose

(3) Install docker

yum install docker

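(4) Set up passwordless SSH login from the deploy host to all other servers

- a) Generate a key pair:

ssh-keygen

- b) Enable passwordless SSH login for each target server by running the following in turn:

ssh-copy-id 192.168.9.11

ssh-copy-id 192.168.9.12

ssh-copy-id 192.168.9.13

ssh-copy-id 192.168.9.14

ssh-copy-id 192.168.9.20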
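With more than a handful of nodes, typing each ssh-copy-id line by hand gets tedious. The sketch below generates the commands from a host list; the function name `print_copy_id_cmds` is hypothetical and the addresses are the lab addresses from the table above.

```shell
#!/usr/bin/env bash
# Sketch: emit one ssh-copy-id command per target host.
# The host list mirrors the lab environment; replace it with your own.
HOSTS=(192.168.9.11 192.168.9.12 192.168.9.13 192.168.9.14 192.168.9.20)

# Print the commands instead of running them, so they can be reviewed first.
print_copy_id_cmds() {
  local host
  for host in "$@"; do
    printf 'ssh-copy-id %s\n' "$host"
  done
}

print_copy_id_cmds "${HOSTS[@]}"
```

Printing rather than executing is a deliberate choice here: ssh-copy-id asks for each host's password interactively, so the generated lines are meant to be reviewed and then run one at a time.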

II. Fetch the Breeze resource file for a specific K8S version and start the deployment tool. This walkthrough targets the just-released K8S v1.12.1:

curl -L https://raw.githubusercontent.com/wise2ck8s/breeze/v1.12.1/docker-compose.yml -o docker-compose.yml

docker-compose up -d

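III. Open the deployment tool's web page in a browser (deploy host IP, port 88) and start deploying

(1) Click the Start button, then click the + icon to add a cluster:

(2) Click the cluster icon to open the add-host screen:

Click the "Add host" button in the upper-right corner:

Repeat until all 5 servers of the cluster have been added:

Click Next to define the service components

(3) Click the "Add component" button in the upper-right corner to add a service component. Select docker; because every host needs it, there is no need to select servers:

Then add the registry component

Note: for registry entry point, enter the Harbor server's IP address by default; in environments that use a domain name, enter the domain name instead

Next add the etcd component. Here we co-locate it on the k8s master nodes, but you can also choose dedicated hosts:

Finally add the k8s component, which is split into master and minion nodes:

Note: the kubernetes entry point exists for HA scenarios. In this lab, for example, each k8s master node also runs haproxy and keepalived, which provide the virtual IP 192.168.9.30 on port 6444, so the value to enter is 192.168.9.30:6444. If you install only one master, enter that master's endpoint, for example 192.168.9.11:6443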
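The rule for the kubernetes entry point field can be written down as a small helper. This is only an illustration of the note above; the function name `k8s_entry_point` and its argument order are hypothetical, while the ports 6444 and 6443 come from the text.

```shell
#!/usr/bin/env bash
# Sketch: choose the "kubernetes entry point" value.
# Args: number of masters, Keepalived VIP, IP of the first master.
k8s_entry_point() {
  local masters=$1 vip=$2 first_master=$3
  if [ "$masters" -gt 1 ]; then
    echo "$vip:6444"            # HA: HAProxy listens on 6444 behind the VIP
  else
    echo "$first_master:6443"   # single master: the API server port directly
  fi
}

k8s_entry_point 3 192.168.9.30 192.168.9.11   # prints 192.168.9.30:6444
k8s_entry_point 1 192.168.9.30 192.168.9.11   # prints 192.168.9.11:6443
```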

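The completed configuration looks like this:

For a highly available (HA) architecture, prepare the following resources on the deploy host in advance. For details, see:

https://github.com/wise2ck8s/haproxy-k8s

https://github.com/wise2ck8s/keepalived-k8s

(1) haproxy-k8s image and startup script

(2) keepalived-k8s image and startup script

Pull the two images on the deploy host: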

docker pull wise2c/haproxy-k8s

docker pull wise2c/keepalived-k8s

Save the images to an archive:

docker save wise2c/haproxy-k8s wise2c/keepalived-k8s -o /root/k8s-ha.tar

Copy the archive to all master nodes:

scp /root/k8s-ha.tar 192.168.9.11:/root/

scp /root/k8s-ha.tar 192.168.9.12:/root/

scp /root/k8s-ha.tar 192.168.9.13:/root/

Download the startup scripts:

curl -L -o /root/start-haproxy.sh https://raw.githubusercontent.com/wise2ck8s/haproxy-k8s/master/start-haproxy.sh

Edit the IP addresses in this script to match your environment:

MasterIP1=192.168.9.11

MasterIP2=192.168.9.12

MasterIP3=192.168.9.13

curl -L -o /root/start-keepalived.sh https://raw.githubusercontent.com/wise2ck8s/keepalived-k8s/master/start-keepalived.sh

Edit the VIP address and network interface name in this script to match your environment:

VIRTUAL_IP=192.168.9.30

INTERFACE=ens33
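If the two scripts need the same edits on several occasions, the variable changes can be scripted. The sketch below assumes each setting sits on its own line in the form `NAME=value`, as in the excerpts above; the helper name `set_script_var` is hypothetical, and `sed -i` is used in its GNU form as found on CentOS.

```shell
#!/usr/bin/env bash
# Sketch: set a NAME=value line in a startup script, in place.
# Assumption: the variable is assigned at the start of a line.
# Values containing "|" or "&" would need escaping; IPs and NIC names do not.
set_script_var() {
  local file=$1 name=$2 value=$3
  sed -i "s|^\(${name}\)=.*|\1=${value}|" "$file"
}

# Example usage with the values from this walkthrough:
#   set_script_var /root/start-haproxy.sh MasterIP1 192.168.9.11
#   set_script_var /root/start-keepalived.sh VIRTUAL_IP 192.168.9.30
#   set_script_var /root/start-keepalived.sh INTERFACE ens33
```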

Copy the scripts to all master nodes:

chmod +x /root/start-haproxy.sh /root/start-keepalived.sh

scp -p /root/start-haproxy.sh 192.168.9.11:/root/

scp -p /root/start-haproxy.sh 192.168.9.12:/root/

scp -p /root/start-haproxy.sh 192.168.9.13:/root/

scp -p /root/start-keepalived.sh 192.168.9.11:/root/

scp -p /root/start-keepalived.sh 192.168.9.12:/root/

scp -p /root/start-keepalived.sh 192.168.9.13:/root/

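IV. Click Next to run the deployment:

During deployment, the screen shows logs, and the icon colors change dynamically:

When the Docker icon turns green, docker is running normally on all nodes. At that point you do not have to wait for the rest of the deployment to finish; you can go to every k8s master node right away and enable the HA components: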

docker load -i /root/k8s-ha.tar

/root/start-haproxy.sh

/root/start-keepalived.sh
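Because passwordless SSH from the deploy host is already in place, the three commands above could also be sent to every master from the deploy host. The following is a sketch under that assumption; the names `MASTERS`, `HA_CMD`, `DRY_RUN`, and `enable_ha` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: enable the HA components on every master over SSH.
# The master list mirrors the lab addresses; adjust it for your cluster.
MASTERS="192.168.9.11 192.168.9.12 192.168.9.13"

# The three commands from the article, chained so a failure stops the rest.
HA_CMD='docker load -i /root/k8s-ha.tar && /root/start-haproxy.sh && /root/start-keepalived.sh'

# Prints what would run by default; set DRY_RUN=0 to actually execute.
enable_ha() {
  local m
  for m in $MASTERS; do
    if [ "${DRY_RUN:-1}" = 1 ]; then
      printf 'ssh %s "%s"\n' "$m" "$HA_CMD"
    else
      ssh "$m" "$HA_CMD"
    fi
  done
}

enable_ha
```

The dry-run default is there so the generated commands can be read before anything touches the masters.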

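Then wait patiently until every component on the deployment screen turns green; that completes the deployment of the highly available K8S cluster.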

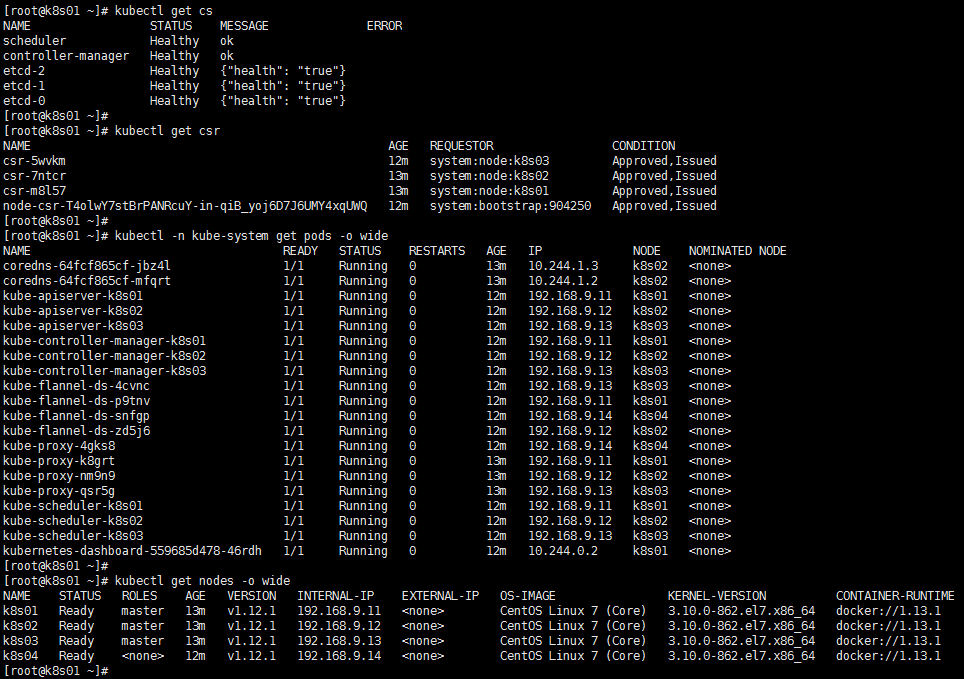

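Verification:

Thanks to our colleagues Hua Xiang, Ann, and Alan for their hard work on this project. We will keep improving it and contributing it to the community.

Kubernetes Chinese Community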


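1. Can the registry be left out?

2. What can be simplified with one master and several workers (or even a single host)?

3. During installation, docker did not start automatically after being installed, the installation check reported failure, and the installation stalled

I suggest holding off on updates after the initial OS install and trying again. Judging from your log, Ansible hit an error while copying files, which may be related to your Python version. Once everything is installed, running yum update does not cause conflicts.

1. The design is meant to support both offline and online installation as well as large-scale node deployment, so the Harbor registry server is required.

2. You can add just one master instead of three, and the same goes for etcd. A minimum of 1 master and 0 nodes will also run.

3. Which OS version are you on? Was it a standard Minimal install? Please post the error log so we can analyze it.

1. Configuration: 1 master, 3 workers

2. CentOS 7.5 Minimal; after the initial install, only yum update was run to update the base packages

The last log lines follow (k8s failed, everything else succeeded)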

Oct 11 2018 08:09:40: [kubernetes] [192.168.10.125] task: pull k8s pause images – ok, message: All items completed

124 Oct 11 2018 08:09:42: [kubernetes] [192.168.10.108] task: pull k8s pause images – ok, message: All items completed

125 Oct 11 2018 08:09:43: [kubernetes] [192.168.10.125] task: tag k8s pause images – ok, message: All items completed

126 Oct 11 2018 08:09:43: [kubernetes] [192.168.10.108] task: tag k8s pause images – ok, message: All items completed

127 Oct 11 2018 08:09:45: [kubernetes] [192.168.10.108] task: copy k8s admin.conf – failed, message: All items completed

128 Oct 11 2018 08:09:45: [kubernetes] [192.168.10.125] task: copy k8s admin.conf – failed, message: All items completed

129 Oct 11 2018 08:09:45: [kubernetes] [all] task: ending – failed, message:

Looks great, thanks for open-sourcing it

disablerepo: '*'

enablerepo: wise2c

Something is wrong with this repo; docker cannot be installed

Can the other nodes reach port 2009 on the deploy host directly (for example, curl http://deploy-server-ip:2009)? The wise2c repo is not on the Internet; it is served by the yum-repo module once the deploy program is running.
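One way to answer the port question from a cluster node without installing extra tools is bash's built-in /dev/tcp redirection. This is a sketch, not part of Breeze; the function name `port_open` is hypothetical, and it assumes bash and the coreutils `timeout` command are available on the node.

```shell
#!/usr/bin/env bash
# Sketch: test whether a TCP port is reachable from this machine.
# Returns 0 if a connection to HOST:PORT succeeds within 3 seconds.
port_open() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Example, run on a cluster node (replace the IP with your deploy host):
#   port_open 192.168.9.10 2009 && echo "yum repo reachable" || echo "port 2009 blocked"
```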

Oct 11 2018 07:27:02: [kubernetes] [all] task: ending – failed, message:

With only this one line, the error information is incomplete and hard to analyze. Run docker logs -f deploy-main on the deploy host to get a more detailed cause.
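When a task log from the deployment page is long, filtering it down to the failing tasks makes the first error easier to spot. The sketch below assumes the log has been saved to a text file in the format pasted in this thread; the function name `failed_tasks` and the file name `breeze-ui.log` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: keep only the task lines whose status is "failed" or "unreachable".
# Accepts either a plain hyphen or an en dash before the status, since
# pasted logs often contain the typographic dash.
failed_tasks() {
  grep -E 'task: .+ (-|–) (failed|unreachable),'
}

# Example usage on a saved copy of the page log:
#   failed_tasks < breeze-ui.log
# For the raw Ansible output on the deploy host:
#   docker logs deploy-main 2>&1 | grep -E 'fatal:|FAILED!'
```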

Setting up a single master fails, and setting up three masters fails too

66 Oct 11 2018 08:37:33: [kubernetes] [192.168.213.130] task: pull k8s pause images – ok, message: All items completed

67 Oct 11 2018 08:37:33: [kubernetes] [192.168.213.130] task: tag k8s pause images – failed, message: All items completed

68 Oct 11 2018 08:37:32: [kubernetes] [192.168.213.131] task: setup node – ok, message:

69 Oct 11 2018 08:37:32: [kubernetes] [192.168.213.129] task: setup node – skipped, message:

70 Oct 11 2018 08:37:33: [kubernetes] [192.168.213.131] task: tag k8s pause images – failed, message: All items completed

71 Oct 11 2018 08:37:33: [kubernetes] [all] task: ending – failed, message:

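Same as above. Did you also run an update? I suggest holding off on updates after the initial OS install and trying again. Judging from your log, Ansible hit an error while copying files, which may be related to your Python version. Once everything is installed, running yum update does not cause conflicts.

It stops here. 180 is the deploy host, 181 the master, 183 the node, 186 harbor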

99 Oct 11 2018 15:13:48: [kubernetes] [192.168.88.181] task: copy crt & key – ok, message: All items completed

100 Oct 11 2018 15:14:44: [kubernetes] [192.168.88.181] task: setup – failed, message: non-zero return code

101 Oct 11 2018 15:14:44: [kubernetes] [192.168.88.183] task: setup node – ok, message:

102 Oct 11 2018 15:14:44: [kubernetes] [192.168.88.183] task: pull k8s pause images – ok, message: All items completed

103 Oct 11 2018 15:14:44: [kubernetes] [192.168.88.183] task: tag k8s pause images – ok, message: All items completed

104 Oct 11 2018 15:14:45: [kubernetes] [192.168.88.183] task: copy k8s admin.conf – ok, message: All items completed

———————————-

[GIN] 2018/10/11 – 15:13:35 | 200 | 28.774365ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:36.267127 5 main.go:181] &{map[msg: changed:true] Oct 11 2018 15:13:36 {docker login ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:36 | 200 | 27.545157ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:38.497051 5 main.go:181] &{map[msg:All items completed changed:false] Oct 11 2018 15:13:38 {tag images ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:38 | 200 | 27.922322ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:41.942327 5 main.go:181] &{map[msg:All items completed changed:true] Oct 11 2018 15:13:41 {push images ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:41 | 200 | 27.278648ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:42.248301 5 main.go:181] &{map[changed:false msg:All items completed] Oct 11 2018 15:13:42 {pull k8s pause images ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:42 | 200 | 25.530531ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:42.540002 5 main.go:181] &{map[changed:false msg:All items completed] Oct 11 2018 15:13:42 {tag k8s pause images ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:42 | 200 | 47.731959ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:44.132523 5 main.go:181] &{map[msg:All items completed changed:false] Oct 11 2018 15:13:44 {generate kubeadm config ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:44 | 200 | 28.180382ms | 127.0.0.1 | POST /v1/notify

I1011 15:13:48.573108 5 main.go:181] &{map[msg:All items completed changed:false] Oct 11 2018 15:13:48 {copy crt & key ok} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:13:48 | 200 | 23.20246ms | 127.0.0.1 | POST /v1/notify

I1011 15:14:44.324118 5 main.go:181] &{map[msg:non-zero return code changed:true] Oct 11 2018 15:14:44 {setup failed} kubernetes processing 192.168.88.181}

[GIN] 2018/10/11 – 15:14:44 | 200 | 18.586765ms | 127.0.0.1 | POST /v1/notify

I1011 15:14:44.347609 5 main.go:181] &{map[changed:false msg:] Oct 11 2018 15:14:44 {setup node ok} kubernetes processing 192.168.88.183}

[GIN] 2018/10/11 – 15:14:44 | 200 | 7.817755ms | 127.0.0.1 | POST /v1/notify

I1011 15:14:44.670586 5 main.go:181] &{map[msg:All items completed changed:false] Oct 11 2018 15:14:44 {pull k8s pause images ok} kubernetes processing 192.168.88.183}

[GIN] 2018/10/11 – 15:14:44 | 200 | 24.587385ms | 127.0.0.1 | POST /v1/notify

I1011 15:14:44.954076 5 main.go:181] &{map[msg:All items completed changed:false] Oct 11 2018 15:14:44 {tag k8s pause images ok} kubernetes processing 192.168.88.183}

[GIN] 2018/10/11 – 15:14:44 | 200 | 24.496933ms | 127.0.0.1 | POST /v1/notify

I1011 15:14:45.280108 5 main.go:181] &{map[changed:false msg:All items completed] Oct 11 2018 15:14:45 {copy k8s admin.conf ok} kubernetes processing 192.168.88.183}

[GIN] 2018/10/11 – 15:14:45 | 200 | 26.571485ms | 127.0.0.1 | POST /v1/notify

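A collective reply: Ansible depends on Python, so whether the program succeeds depends heavily on how "clean" the OS environment is. Our recommendation is to start from a standard CentOS 7.5 Minimal install and begin the deployment without doing anything else first. We have not yet seen a failure in that situation.

If Python or other packages have been installed or upgraded on the system, things get more complicated.

Installation succeeded, but the dashboard page cannot be accessed? Version 1.12.1

Run kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

to get the dashboard access token. After deployment, the dashboard is exposed on NodePort 30300, so open port 30300 on any Worker node and log in with that token.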
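The describe output contains several fields besides the token. As a small convenience, the sketch below prints only the token value; it assumes the usual `token:` line of `kubectl describe secret` output, and the function name `extract_token` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: pull the token value out of `kubectl describe secret` output.
# Reads the describe output on stdin and prints the second field of the
# line that starts with "token:".
extract_token() {
  awk '$1 == "token:" { print $2 }'
}

# Example usage on a master node:
#   kubectl -n kube-system describe secret \
#     "$(kubectl -n kube-system get secret | awk '/admin-user/ {print $1}')" \
#     | extract_token
```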

Thanks for the reply.

The problem now is that https://node-ip:30300 does not open.

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/kube-dns ClusterIP 10.96.0.10 <none> 53/UDP,53/TCP 11h

service/kubernetes-dashboard NodePort 10.100.82.205 <none> 8443:30300/TCP 11h

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/kube-flannel-ds 5 5 3 5 3 beta.kubernetes.io/arch=amd64 11h

daemonset.apps/kube-proxy 5 5 3 5 3 <none> 11h

NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

deployment.apps/coredns 2 2 2 2 11h

deployment.apps/kubernetes-dashboard 1 1 1 1 11h

NAME DESIRED CURRENT READY AGE

replicaset.apps/coredns-7f5cd54cfc 2 2 2 11h

replicaset.apps/kubernetes-dashboard-577d54cbb8 1 1 1 11h

Dashboard log:

2018/10/13 03:14:38 Metric client health check failed: unknown (get services heapster). Retrying in 30 seconds.

2018/10/13 03:15:03 http: TLS handshake error from 10.244.4.0:4919: EOF

2018/10/13 03:15:08 Metric client health check failed: unknown (get services heapster). Retrying in 30 seconds.

2018/10/13 03:15:39 Metric client health check failed: unknown (get services heapster). Retrying in 30 seconds.

2018/10/13 03:16:43 Metric client health check failed: unknown (get services heapster). Retrying in 30 seconds.

2018/10/13 03:18:13 Metric client health check failed: the server has asked for the client to provide credentials (get services heapster). Retrying in 30 seconds.

2018/10/13 03:18:43 Metric client health check failed: unknown (get services heapster). Retrying in 30 seconds.

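The errors you pasted last from the Dashboard do not affect usage. As for HTTPS access, it currently only works in Firefox; Chrome rejects the self-signed certificate (Google's security policy is too strict)

I tried Firefox and the page still does not open. Is it related to version 1.12.1?

Clean CentOS 7.5 Minimal

4 servers: 1 running Breeze, 1 Harbor, 1 Master, 1 Node

The kubernetes installation failed at the end with the following errors: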

83 Oct 13 2018 14:38:15: [kubernetes] [192.168.2.250] task: copy k8s images – ok, message: All items completed

84 Oct 13 2018 14:39:19: [kubernetes] [192.168.2.250] task: load k8s images – ok, message: All items completed

85 Oct 13 2018 14:39:20: [kubernetes] [192.168.2.250] task: docker login – ok, message:

86 Oct 13 2018 14:39:24: [kubernetes] [192.168.2.250] task: tag images – ok, message: All items completed

87 Oct 13 2018 14:40:15: [kubernetes] [192.168.2.250] task: push images – ok, message: All items completed

88 Oct 13 2018 14:40:15: [kubernetes] [192.168.2.250] task: pull k8s pause images – ok, message: All items completed

89 Oct 13 2018 14:40:16: [kubernetes] [192.168.2.250] task: tag k8s pause images – ok, message: All items completed

90 Oct 13 2018 14:40:17: [kubernetes] [192.168.2.250] task: generate kubeadm config – ok, message: All items completed

91 Oct 13 2018 14:40:21: [kubernetes] [192.168.2.250] task: copy crt & key – ok, message: All items completed

92 Oct 13 2018 14:45:41: [kubernetes] [192.168.2.250] task: setup – failed, message: non-zero return code

93 Oct 13 2018 14:45:41: [kubernetes] [192.168.2.100] task: setup node – ok, message:

94 Oct 13 2018 14:45:54: [kubernetes] [192.168.2.100] task: pull k8s pause images – ok, message: All items completed

95 Oct 13 2018 14:45:55: [kubernetes] [192.168.2.100] task: tag k8s pause images – ok, message: All items completed

96 Oct 13 2018 14:45:56: [kubernetes] [192.168.2.100] task: copy k8s admin.conf – failed, message: All items completed

97 Oct 13 2018 14:45:56: [kubernetes] [all] task: ending – failed, message:

61 Oct 14 2018 11:35:39: [kubernetes] [192.168.52.134] task: pull k8s pause images – ok, message: All items completed

62 Oct 14 2018 11:35:39: [kubernetes] [192.168.52.134] task: tag k8s pause images – ok, message: All items completed

63 Oct 14 2018 11:35:39: [kubernetes] [192.168.52.134] task: copy k8s admin.conf – ok, message: All items completed

64 Oct 14 2018 11:35:39: [kubernetes] [192.168.52.134] task: setup node – ok, message:

65 Oct 14 2018 11:35:40: [kubernetes] [192.168.52.134] task: setup node – ok, message:

66 Oct 14 2018 11:35:40: [kubernetes] [192.168.52.134] task: restart kubelet – ok, message:

67 Oct 14 2018 11:35:40: [kubernetes] [all] task: ending – failed, message:

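If you run into problems, feel free to open an issue at https://github.com/wise2c-devops/breeze and I will make sure to reply. You can also email me directly (peng.alan@gmail.com); logs pasted here are sometimes incomplete and hard to analyze.

Wow, does your company really install k8s with this thing? It doesn't even handle timeouts

If you have any suggestions for improving this tool, please open an issue on GitHub or submit a PR so we can improve it together. Thanks!

It failed at the last step, installing kubernetes. Please help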

170 Oct 16 2018 04:46:53: [kubernetes] [10.1.5.109] task: generate kubeadm config – ok, message: All items completed

171 Oct 16 2018 04:46:53: [kubernetes] [10.1.5.108] task: generate kubeadm config – ok, message: All items completed

172 Oct 16 2018 04:46:53: [kubernetes] [10.1.5.110] task: generate kubeadm config – ok, message: All items completed

173 Oct 16 2018 04:47:01: [kubernetes] [10.1.5.109] task: copy crt & key – ok, message: All items completed

174 Oct 16 2018 04:47:01: [kubernetes] [10.1.5.108] task: copy crt & key – ok, message: All items completed

175 Oct 16 2018 04:47:02: [kubernetes] [10.1.5.110] task: copy crt & key – ok, message: All items completed

176 Oct 16 2018 04:47:03: [kubernetes] [10.1.5.108] task: setup – failed, message: non-zero return code

177 Oct 16 2018 04:47:03: [kubernetes] [10.1.5.110] task: setup – failed, message: non-zero return code

178 Oct 16 2018 04:47:03: [kubernetes] [10.1.5.109] task: setup – failed, message: non-zero return code

179 Oct 16 2018 04:47:03: [kubernetes] [10.1.5.111] task: setup node – ok, message:

180 Oct 16 2018 04:47:05: [kubernetes] [10.1.5.111] task: pull k8s pause images – ok, message: All items completed

181 Oct 16 2018 04:47:06: [kubernetes] [10.1.5.111] task: tag k8s pause images – ok, message: All items completed

182 Oct 16 2018 04:47:06: [kubernetes] [10.1.5.111] task: copy k8s admin.conf – failed, message: All items completed

183 Oct 16 2018 04:47:06: [kubernetes] [all] task: ending – failed, message:

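Installation succeeded with 1 master, 1 worker, and 1 registry, but the dashboard page cannot be accessed. https://nodeip:30300 shows nothing; I tried Firefox, Edge, and IE. Port 30300 seems unreachable. What is the cause?

Installation succeeded with 1 master, 1 worker, and 1 registry, but the dashboard cannot be reached through the node IP, while https://masterip:30300 using the master's IP works. What is the cause?

With a NodePort, if it is reachable on the master there is no reason it should be unreachable on the worker node. This failure is really odd. Does curl -k https://localhost:30300 work locally on the worker node?

[root@k8s_worker_001 ~]# curl -k https://localhost:30300

curl: (7) Failed connect to localhost:30300; Connection refused

At the last step, installing kubernetes failed. Details: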

ok: [10.1.5.110] => (item={u'dest': u'/etc/kubernetes/pki', u'src': u'file/apiserver-kubelet-client.crt'})

TASK [setup] *******************************************************************

fatal: [10.1.5.108]: FAILED! => {"changed": true, "cmd": "kubeadm init --config /var/tmp/wise2c/kubernetes/kubeadm.conf", "delta": "0:00:55.539069", "end": "2018-10-16 21:53:05.833640", "failed": true, "msg": "non-zero return code", "rc": 2, "start": "2018-10-16 21:52:10.294571", "stderr": "\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly\n\t[WARNING RequiredIPVSKernelModulesAvailable]: the IPVS proxier will not be used, because the following required kernel modules are not loaded: [ip_vs_wrr ip_vs_sh ip_vs ip_vs_rr] or no builtin kernel ipvs support: map[ip_vs:{} ip_vs_rr:{} ip_vs_wrr:{} ip_vs_sh:{} nf_conntrack_ipv4:{}]\nyou can solve this problem with following methods:\n 1. Run 'modprobe -- ' to load missing kernel modules;\n2. Provide the missing builtin kernel ipvs support\n\n[preflight] Some fatal errors occurred:\n\t[ERROR ExternalEtcdVersion]: Get http://10.1.5.108:2379/version: net/http: request canceled (Client.Timeout exceeded while awaiting headers)\n[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`", "stderr_lines": ["\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly", "\t[WARNING RequiredIPVSKernelModulesAvailable]: the IPVS proxier will not be used, because the following required kernel modules are not loaded: [ip_vs_wrr ip_vs_sh ip_vs ip_vs_rr] or no builtin kernel ipvs support: map[ip_vs:{} ip_vs_rr:{} ip_vs_wrr:{} ip_vs_sh:{} nf_conntrack_ipv4:{}]", "you can solve this problem with following methods:", " 1. Run 'modprobe -- ' to load missing kernel modules;", "2. Provide the missing builtin kernel ipvs support", "", "[preflight] Some fatal errors occurred:", "\t[ERROR ExternalEtcdVersion]: Get http://10.1.5.108:2379/version: net/http: request canceled (Client.Timeout exceeded while awaiting headers)", "[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`"], "stdout": "[init] using Kubernetes version: v1.12.1\n[preflight] running pre-flight checks", "stdout_lines": ["[init] using Kubernetes version: v1.12.1", "[preflight] running pre-flight checks"]}
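In output like the above, the decisive lines are the `[ERROR ...]` preflight entries, which are easy to miss among the warnings. The sketch below extracts them from the raw Ansible output; it assumes the text comes straight from docker logs with plain ASCII quotes, and the function name `preflight_errors` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the distinct kubeadm preflight errors in Ansible output.
# Each match runs from "[ERROR Name]: " up to the next backslash or double
# quote, which is where the message ends inside the JSON-style dump.
preflight_errors() {
  grep -oE '\[ERROR [A-Za-z]+\]: [^\"]+' | sort -u
}

# Example usage on the deploy host:
#   docker logs deploy-main 2>&1 | preflight_errors
```

For the log in this comment, the only such entry is the ExternalEtcdVersion timeout against http://10.1.5.108:2379/version, which suggests checking whether etcd on that host is running and reachable; that reading is an inference from the log, not a confirmed diagnosis.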

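One master and 2 workers; the final kubernetes installation reports: [kubernetes] [192.168.18.222] task: docker login – failed, message: Logging into 92.168.18.222:80 for user admin failed – UnixHTTPConnectionPool(host='localhost', port=None): Read timed out. (read timeout=60). I tried several times and cannot get past this error

I have replied to your email. This was caused by a mistyped address: it should start with 192, not 92

Adding a cluster hangs here. Where can I look at the logs?

You are welcome to send the detailed steps and screenshots to peng.alan@gmail.com so I can reply. For how to view logs and for analysis of common failures, see the home page of https://github.com/wise2c-devops/breeze.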

Oct 18 2018 10:21:22: [docker] [10.26.23.175] task: distribute ipvs bootload file – ok, message: All items completed

29 Oct 18 2018 10:21:22: [docker] [10.26.92.235] task: distribute ipvs bootload file – ok, message: All items completed

30 Oct 18 2018 10:21:22: [docker] [10.27.71.42] task: distribute ipvs bootload file – ok, message: All items completed

31 Oct 18 2018 10:21:26: [docker] [10.26.23.175] task: install docker – failed, message: All items completed

32 Oct 18 2018 10:21:26: [docker] [10.26.92.235] task: install docker – failed, message: All items completed

33 Oct 18 2018 10:21:27: [docker] [10.27.71.42] task: install docker – failed, message: All items completed

34 Oct 18 2018 10:21:27: [docker] [all] task: ending – failed, message:

Why does the docker installation fail right away?

curl -L https://raw.githubusercontent.com/wise2ck8s/breeze/v1.12.1/docker-compose.yml -o docker-compose.yml

docker-compose up -d

I pulled the images using the yml file above, but the pull does not complete. It stalls at around 160M.

Same error as comment 9#

Oct 23 2018 09:01:07: [kubernetes] [192.168.5.111] task: copy crt & key – ok, message: All items completed

It hangs right here

Oct 23 2018 09:00:58: [docker] [10.41.6.142] task: Gathering Facts – unreachable, message: Failed to connect to the host via ssh: Control socket connect(/root/.ansible/cp/9575127c95): Connection refused Failed to connect to new control master

2 Oct 23 2018 09:00:58: [docker] [10.41.6.165] task: Gathering Facts – unreachable, message: Failed to connect to the host via ssh: Control socket connect(/root/.ansible/cp/74567dcbf8): Connection refused Failed to connect to new control master

3 Oct 23 2018 09:00:58: [docker] [10.41.6.168] task: Gathering Facts – unreachable, message: Failed to connect to the host via ssh: Control socket connect(/root/.ansible/cp/862e37072f): Connection refused Failed to connect to new control master

4 Oct 23 2018 09:00:58: [docker] [10.41.6.167] task: Gathering Facts – unreachable, message: Failed to connect to the host via ssh: Control socket connect(/root/.ansible/cp/8d518cb546): Connection refused Failed to connect to new control master

5 Oct 23 2018 09:00:58: [docker] [10.41.6.158] task: Gathering Facts – unreachable, message: Failed to connect to the host via ssh: Control socket connect(/root/.ansible/cp/10eb0815db): Connection refused Failed to connect to new control master

6 Oct 23 2018 09:00:58: [docker] [all] task: ending – failed, message:

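The RSA keys have all been authorized, and I found no solution after reading a lot online. The comments suggest it is Python-related, but my Python version is 2.7.5, which is fairly recent. The log shows a .ansible directory; do I need to install ansible? I am at a loss and hope the moderator can help. Contact: pilinwen@126.com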

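Search for and follow the WeChat official account [Wise2C], then reply with [进群] ("join group"); the Wise2C assistant will add you to the [Docker企业落地实践群] group right away, where we can discuss questions and suggestions about the Breeze deployment tool!

The deployment page reports that initialization failed. The deploy host's log shows the errors below. Could someone please take a look at what is wrong?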

Jan 08 2019 06:54:01: [kubernetes] [192.168.1.61] task: kubeadm init – failed, message: non-zero return code

Jan 08 2019 06:54:01: [kubernetes] [192.168.1.64] task: kubeadm init – failed, message: non-zero return code

Jan 08 2019 06:54:01: [kubernetes] [192.168.1.62] task: kubeadm init – failed, message: non-zero return code

Jan 08 2019 06:54:01: [kubernetes] [all] task: ending – failed, message:

TASK [kubeadm init] ************************************************************

fatal: [192.168.1.61]: FAILED! => {“changed”: true, “cmd”: “kubeadm init –config /var/tmp/wise2c/kubernetes/kubeadm.conf”, “delta”: “0:00:10.762311”, “end”: “2019-01-08 22:56:47.947686”, “msg”: “non-zero return code”, “rc”: 1, “start”: “2019-01-08 22:56:37.185375”, “stderr”: “W0108 22:56:37.471437 17440 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.61\”\nI0108 22:56:47.472072 17440 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)\nI0108 22:56:47.472103 17440 version.go:95] falling back to the local client version: v1.13.1\n\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly\nerror execution phase preflight: [preflight] Some fatal errors occurred:\n\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2\n[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”, “stderr_lines”: [“W0108 22:56:37.471437 17440 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.61\””, “I0108 22:56:47.472072 17440 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)”, “I0108 22:56:47.472103 17440 version.go:95] falling back to the local client version: v1.13.1”, “\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly”, 
“error execution phase preflight: [preflight] Some fatal errors occurred:”, “\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2”, “[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”], “stdout”: “[init] Using Kubernetes version: v1.13.1\n[preflight] Running pre-flight checks”, “stdout_lines”: [“[init] Using Kubernetes version: v1.13.1”, “[preflight] Running pre-flight checks”]}

fatal: [192.168.1.62]: FAILED! => {“changed”: true, “cmd”: “kubeadm init –config /var/tmp/wise2c/kubernetes/kubeadm.conf”, “delta”: “0:00:10.731643”, “end”: “2019-01-08 22:56:47.972533”, “msg”: “non-zero return code”, “rc”: 1, “start”: “2019-01-08 22:56:37.240890”, “stderr”: “W0108 22:56:37.515837 15551 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.62\”\nI0108 22:56:47.516577 15551 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled (Client.Timeout exceeded while awaiting headers)\nI0108 22:56:47.516598 15551 version.go:95] falling back to the local client version: v1.13.1\n\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly\nerror execution phase preflight: [preflight] Some fatal errors occurred:\n\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2\n[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”, “stderr_lines”: [“W0108 22:56:37.515837 15551 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.62\””, “I0108 22:56:47.516577 15551 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled (Client.Timeout exceeded while awaiting headers)”, “I0108 22:56:47.516598 15551 version.go:95] falling back to the local client version: v1.13.1”, “\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly”, “error execution phase preflight: [preflight] Some fatal 
errors occurred:”, “\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2”, “[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”], “stdout”: “[init] Using Kubernetes version: v1.13.1\n[preflight] Running pre-flight checks”, “stdout_lines”: [“[init] Using Kubernetes version: v1.13.1”, “[preflight] Running pre-flight checks”]}

fatal: [192.168.1.64]: FAILED! => {“changed”: true, “cmd”: “kubeadm init –config /var/tmp/wise2c/kubernetes/kubeadm.conf”, “delta”: “0:00:10.783113”, “end”: “2019-01-08 22:56:48.064877”, “msg”: “non-zero return code”, “rc”: 1, “start”: “2019-01-08 22:56:37.281764”, “stderr”: “W0108 22:56:37.630647 15547 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.64\”\nI0108 22:56:47.630960 15547 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)\nI0108 22:56:47.630991 15547 version.go:95] falling back to the local client version: v1.13.1\n\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly\nerror execution phase preflight: [preflight] Some fatal errors occurred:\n\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2\n[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”, “stderr_lines”: [“W0108 22:56:37.630647 15547 common.go:159] WARNING: overriding requested API server bind address: requested \”127.0.0.1\”, actual \”192.168.1.64\””, “I0108 22:56:47.630960 15547 version.go:94] could not fetch a Kubernetes version from the internet: unable to get URL \”https://dl.k8s.io/release/stable-1.txt\”: Get https://storage.googleapis.com/kubernetes-release/release/stable-1.txt: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)”, “I0108 22:56:47.630991 15547 version.go:95] falling back to the local client version: v1.13.1”, “\t[WARNING Firewalld]: firewalld is active, please ensure ports [6443 10250] are open or your cluster may not function correctly”, 
“error execution phase preflight: [preflight] Some fatal errors occurred:”, “\t[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2”, “[preflight] If you know what you are doing, you can make a check non-fatal with `–ignore-preflight-errors=…`”], “stdout”: “[init] Using Kubernetes version: v1.13.1\n[preflight] Running pre-flight checks”, “stdout_lines”: [“[init] Using Kubernetes version: v1.13.1”, “[preflight] Running pre-flight checks”]}

PLAY RECAP *********************************************************************

192.168.1.61 : ok=25 changed=8 unreachable=0 failed=1

192.168.1.62 : ok=18 changed=4 unreachable=0 failed=1

192.168.1.64 : ok=18 changed=4 unreachable=0 failed=1

I0108 06:54:01.883120 1 command.go:239] failed at a step

[GIN] 2019/01/08 – 06:54:01 | 200 | 114.06527ms | 127.0.0.1 | POST /v1/notify

[GIN] 2019/01/08 – 06:54:02 | 200 | 1.060998681s | 127.0.0.1 | POST /v1/notify

[GIN] 2019/01/08 – 06:54:02 | 200 | 1.076344156s | 127.0.0.1 | POST /v1/notify
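The fatal entry in the log above is `[ERROR NumCPU]: the number of available CPUs 1 is less than the required 2`, reported on all three hosts. A check like the following could be run on each master before deploying; it is a sketch, the function name `has_min_cpus` is hypothetical, and the minimum of 2 is taken from that error message.

```shell
#!/usr/bin/env bash
# Sketch: verify that a host has enough CPUs for kubeadm's preflight check.
# Args: required minimum, and optionally a CPU count (defaults to nproc).
has_min_cpus() {
  local min=$1 count=${2:-$(nproc)}
  [ "$count" -ge "$min" ]
}

# Warn when this machine is below the minimum named in the error above.
has_min_cpus 2 || echo "kubeadm preflight would fail: fewer than 2 CPUs"
```

The optional second argument only exists so the comparison can be exercised without changing the machine; in normal use the function is called with the minimum alone.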