1、统一日志管理的整体方案

通过应用和系统日志可以了解Kubernetes集群内所发生的事情,对于调试问题和监视集群活动来说日志非常有用。对于大部分的应用来说,都会具有某种日志机制。因此,大多数容器引擎同样被设计成支持某种日志机制。对于容器化应用程序来说,最简单和最易接受的日志记录方法是将日志内容写入到标准输出和标准错误流。

但是,容器引擎或运行时提供的本地功能通常不足以支撑完整的日志记录解决方案。例如,如果一个容器崩溃、一个Pod被驱逐、或者一个Node死亡,应用相关者可能仍然需要访问应用程序的日志。因此,日志应该具有独立于Node、Pod或者容器的单独存储和生命周期,这个概念被称为群集级日志记录。群集级日志记录需要一个独立的后端来存储、分析和查询日志。Kubernetes本身并没有为日志数据提供原生的存储解决方案,但可以将许多现有的日志记录解决方案集成到Kubernetes集群中。在Kubernetes中,有三个层次的日志:

- 基础日志

- Node级别的日志

- 群集级别的日志架构

1.1 基础日志

kubernetes基础日志即将日志数据输出到标准输出流,可以使用kubectl logs命令获取容器日志信息。如果Pod中有多个容器,可以通过将容器名称附加到命令来指定要访问哪个容器的日志。例如,在Kubernetes集群中的devops命名空间下有一个名称为nexus3-f5b7fc55c-hq5v7的Pod,就可以通过如下的命令获取日志:

$ kubectl logs nexus3-f5b7fc55c-hq5v7 --namespace=devops

1.2 Node级别的日志

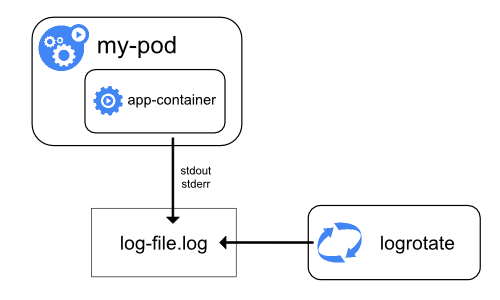

容器化应用写入到stdout和stderr的所有内容都是由容器引擎处理和重定向的。例如,docker容器引擎会将这两个流重定向到日志记录驱动,在Kubernetes中该日志驱动被配置为以json格式写入文件。docker json日志记录驱动将每一行视为单独的消息。当使用docker日志记录驱动时,并不支持多行消息,因此需要在日志代理级别或更高级别上处理多行消息。

默认情况下,如果容器重新启动,kubectl将会保留一个已终止的容器及其日志。如果从Node中驱逐Pod,那么Pod中所有相应的容器也会连同它们的日志一起被驱逐。Node级别的日志中的一个重要考虑是实现日志旋转,这样日志不会消耗Node上的所有可用存储。Kubernetes目前不负责旋转日志,部署工具应该建立一个解决方案来解决这个问题。

在Kubernetes中有两种类型的系统组件:运行在容器中的组件和不在容器中运行的组件。例如:

- Kubernetes调度器和kube-proxy在容器中运行。

- kubelet和容器运行时,例如docker,不在容器中运行。

在带有systemd的机器上,kubelet和容器运行时写入journaId。如果systemd不存在,它们会在/var/log目录中写入.log文件。在容器中的系统组件总是绕过默认的日志记录机制,写入到/var/log目录,它们使用golg日志库。可以找到日志记录中开发文档中那些组件记录严重性的约定。

类似于容器日志,在/var/log目录中的系统组件日志应该被旋转。这些日志被配置为每天由logrotate进行旋转,或者当大小超过100mb时进行旋转。

1.3 集群级别的日志架构

Kubernetes本身没有为群集级别日志记录提供原生解决方案,但有几种常见的方法可以采用:

- 使用运行在每个Node上的Node级别的日志记录代理;

- 在应用Pod中包含一个用于日志记录的sidecar。

- 将日志直接从应用内推到后端。

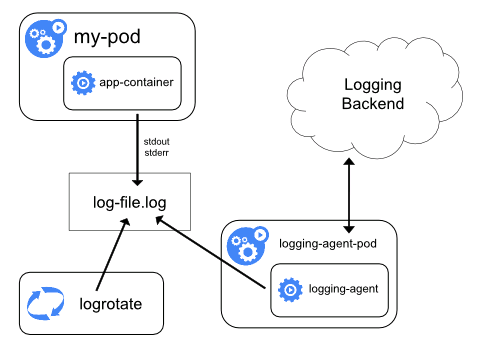

经过综合考虑,本文采用通过在每个Node上包括Node级别的日志记录代理来实现群集级别日志记录。日志记录代理暴露日志或将日志推送到后端的专用工具。通常,logging-agent是一个容器,此容器能够访问该Node上的所有应用程序容器的日志文件。

因为日志记录必须在每个Node上运行,所以通常将它作为DaemonSet副本、或一个清单Pod或Node上的专用本机进程。然而,后两种方法后续将会被放弃。使用Node级别日志记录代理是Kubernetes集群最常见和最受欢迎的方法,因为它只为每个节点创建一个代理,并且不需要对节点上运行的应用程序进行任何更改。但是,Node级别日志记录仅适用于应用程序的标准输出和标准错误。

Kubernetes本身并没有指定日志记录代理,但是有两个可选的日志记录代理与Kubernetes版本打包发布:和谷歌云平台一起使用的Stackdriver和Elasticsearch,两者都使用自定义配置的fluentd作为Node上的代理。在本文的方案中,Logging-agent 采用 Fluentd,而 Logging Backend 采用 Elasticsearch,前端展示采用Grafana。即通过 Fluentd 作为 Logging-agent 收集日志,并推送给后端的Elasticsearch;Grafana从Elasticsearch中获取日志,并进行统一的展示。

2、安装统一日志管理的组件

在本文中采用使用Node日志记录代理的方面进行Kubernetes的统一日志管理,相关的工具采用:

- 日志记录代理(logging-agent):日志记录代理用于从容器中获取日志信息,使用Fluentd;

- 日志记录后台(Logging-Backend):日志记录后台用于处理日志记录代理推送过来的日志,使用Elasticsearch;

- 日志记录展示:日志记录展示用于向用户显示统一的日志信息,使用Kibana。

在Kubernetes中通过了Elasticsearch 附加组件,此组件包括Elasticsearch、Fluentd和Kibana。Elasticsearch是一种负责存储日志并允许查询的搜索引擎。Fluentd从Kubernetes中获取日志消息,并发送到Elasticsearch;而Kibana是一个图形界面,用于查看和查询存储在Elasticsearch中的日志。在安装部署之前,对于环境的要求如下:

2.1 安装部署Elasticsearch

Elasticsearch是一个基于Apache Lucene(TM)的开源搜索和数据分析引擎引擎,Elasticsearch使用Java进行开发,并使用Lucene作为其核心实现所有索引和搜索的功能。它的目的是通过简单的RESTful API来隐藏Lucene的复杂性,从而让全文搜索变得简单。Elasticsearch不仅仅是Lucene和全文搜索,它还提供如下的能力:

- 分布式的实时文件存储,每个字段都被索引并可被搜索;

- 分布式的实时分析搜索引擎;

- 可以扩展到上百台服务器,处理PB级结构化或非结构化数据。

在Elasticsearch中,包含多个索引(Index),相应的每个索引可以包含多个类型(Type),这些不同的类型每个都可以存储多个文档(Document),每个文档又有多个属性。索引 (index) 类似于传统关系数据库中的一个数据库,是一个存储关系型文档的地方。Elasticsearch 使用的是标准的 RESTful API 和 JSON。此外,还构建和维护了很多其他语言的客户端,例如 Java, Python, .NET, 和 PHP。

下面是Elasticsearch的YAML配置文件,在此配置文件中,定义了一个名称为elasticsearch-logging的ServiceAccount,并授予其能够对命名空间、服务和端点读取的访问权限;并以StatefulSet类型部署Elasticsearch。

# RBAC authn and authz

apiVersion: v1

kind: ServiceAccount

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

---

kind: ClusterRole

apiVersion: rbac.authorization.k8s.io/v1

metadata:

name: elasticsearch-logging

labels:

k8s-app: elasticsearch-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

rules:

- apiGroups:

- ""

resources:

- "services"

- "namespaces"

- "endpoints"

verbs:

- "get"

---

kind: ClusterRoleBinding

apiVersion: rbac.authorization.k8s.io/v1

metadata:

namespace: kube-system

name: elasticsearch-logging

labels:

k8s-app: elasticsearch-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

subjects:

- kind: ServiceAccount

name: elasticsearch-logging

namespace: kube-system

apiGroup: ""

roleRef:

kind: ClusterRole

name: elasticsearch-logging

apiGroup: ""

---

# Elasticsearch deployment itself

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

version: v6.2.5

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

serviceName: elasticsearch-logging

replicas: 2

selector:

matchLabels:

k8s-app: elasticsearch-logging

version: v6.2.5

template:

metadata:

labels:

k8s-app: elasticsearch-logging

version: v6.2.5

kubernetes.io/cluster-service: "true"

spec:

serviceAccountName: elasticsearch-logging

containers:

- image: k8s.gcr.io/elasticsearch:v6.2.5

name: elasticsearch-logging

resources:

# need more cpu upon initialization, therefore burstable class

limits:

cpu: 1000m

requests:

cpu: 100m

ports:

- containerPort: 9200

name: db

protocol: TCP

- containerPort: 9300

name: transport

protocol: TCP

volumeMounts:

- name: elasticsearch-logging

mountPath: /data

env:

- name: "NAMESPACE"

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumes:

- name: elasticsearch-logging

emptyDir: {}

# Elasticsearch requires vm.max_map_count to be at least 262144.

# If your OS already sets up this number to a higher value, feel free

# to remove this init container.

initContainers:

- image: alpine:3.6

command: ["/sbin/sysctl", "-w", "vm.max_map_count=262144"]

name: elasticsearch-logging-init

securityContext:

privileged: true

通过执行如下的命令部署Elasticsearch:

$ kubectl create -f {path}/es-statefulset.yaml

下面Elasticsearch的代理服务YAML配置文件,代理服务暴露的端口为9200。

apiVersion: v1

kind: Service

metadata:

name: elasticsearch-logging

namespace: kube-system

labels:

k8s-app: elasticsearch-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

kubernetes.io/name: "Elasticsearch"

spec:

ports:

- port: 9200

protocol: TCP

targetPort: db

selector:

k8s-app: elasticsearch-logging

通过执行如下的命令部署Elasticsearch的代理服务:

$ kubectl create -f {path}/es-service.yaml

2.2 安装部署Fluentd

Fluentd是一个开源数据收集器,通过它能对数据进行统一收集和消费,能够更好地使用和理解数据。Fluentd将数据结构化为JSON,从而能够统一处理日志数据,包括:收集、过滤、缓存和输出。Fluentd是一个基于插件体系的架构,包括输入插件、输出插件、过滤插件、解析插件、格式化插件、缓存插件和存储插件,通过插件可以扩展和更好的使用Fluentd。

Fluentd的整体处理过程如下,通过Input插件获取数据,并通过Engine进行数据的过滤、解析、格式化和缓存,最后通过Output插件将数据输出给特定的终端。

在本文中, Fluentd 作为 Logging-agent 进行日志收集,并将收集到的日子推送给后端的Elasticsearch。对于Kubernetes来说,DaemonSet确保所有(或一些)Node会运行一个Pod副本。因此,Fluentd被部署为DaemonSet,它将在每个节点上生成一个pod,以读取由kubelet,容器运行时和容器生成的日志,并将它们发送到Elasticsearch。为了使Fluentd能够工作,每个Node都必须标记beta.kubernetes.io/fluentd-ds-ready=true。

下面是Fluentd的ConfigMap配置文件,此文件定义了Fluentd所获取的日志数据源,以及将这些日志数据输出到Elasticsearch中。

kind: ConfigMap apiVersion: v1 metadata: name: fluentd-es-config-v0.1.4 namespace: kube-system labels: addonmanager.kubernetes.io/mode: Reconcile data: system.conf: |- <system> root_dir /tmp/fluentd-buffers/ </system> containers.input.conf: |- <source> @id fluentd-containers.log @type tail path /var/log/containers/*.log pos_file /var/log/es-containers.log.pos time_format %Y-%m-%dT%H:%M:%S.%NZ tag raw.kubernetes.* read_from_head true <parse> @type multi_format <pattern> format json time_key time time_format %Y-%m-%dT%H:%M:%S.%NZ </pattern> <pattern> format /^(?<time>.+) (?<stream>stdout|stderr) [^ ]* (?<log>.*)$/ time_format %Y-%m-%dT%H:%M:%S.%N%:z </pattern> </parse> </source> # Detect exceptions in the log output and forward them as one log entry. <match raw.kubernetes.**> @id raw.kubernetes @type detect_exceptions remove_tag_prefix raw message log stream stream multiline_flush_interval 5 max_bytes 500000 max_lines 1000 </match> output.conf: |- # Enriches records with Kubernetes metadata <filter kubernetes.**> @type kubernetes_metadata </filter> <match **> @id elasticsearch @type elasticsearch @log_level info include_tag_key true host elasticsearch-logging port 9200 logstash_format true <buffer> @type file path /var/log/fluentd-buffers/kubernetes.system.buffer flush_mode interval retry_type exponential_backoff flush_thread_count 2 flush_interval 5s retry_forever retry_max_interval 30 chunk_limit_size 2M queue_limit_length 8 overflow_action block </buffer> </match>

通过执行如下的命令创建Fluentd的ConfigMap:

$ kubectl create -f {path}/fluentd-es-configmap.yaml

Fluentd本身的YAML配置文件如下所示:

apiVersion: v1 kind: ServiceAccount metadata: name: fluentd-es namespace: kube-system labels: k8s-app: fluentd-es kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile --- kind: ClusterRole apiVersion: rbac.authorization.k8s.io/v1 metadata: name: fluentd-es labels: k8s-app: fluentd-es kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile rules: - apiGroups: - "" resources: - "namespaces" - "pods" verbs: - "get" - "watch" - "list" --- kind: ClusterRoleBinding apiVersion: rbac.authorization.k8s.io/v1 metadata: name: fluentd-es labels: k8s-app: fluentd-es kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile subjects: - kind: ServiceAccount name: fluentd-es namespace: kube-system apiGroup: "" roleRef: kind: ClusterRole name: fluentd-es apiGroup: "" --- apiVersion: apps/v1 kind: DaemonSet metadata: name: fluentd-es-v2.2.0 namespace: kube-system labels: k8s-app: fluentd-es version: v2.2.0 kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile spec: selector: matchLabels: k8s-app: fluentd-es version: v2.2.0 template: metadata: labels: k8s-app: fluentd-es kubernetes.io/cluster-service: "true" version: v2.2.0 # This annotation ensures that fluentd does not get evicted if the node # supports critical pod annotation based priority scheme. # Note that this does not guarantee admission on the nodes (#40573). annotations: scheduler.alpha.kubernetes.io/critical-pod: '' seccomp.security.alpha.kubernetes.io/pod: 'docker/default' spec: priorityClassName: system-node-critical serviceAccountName: fluentd-es containers: - name: fluentd-es image: k8s.gcr.io/fluentd-elasticsearch:v2.2.0 env: - name: FLUENTD_ARGS value: --no-supervisor -q resources: limits: memory: 500Mi requests: cpu: 100m memory: 200Mi volumeMounts: - name: varlog mountPath: /var/log - name: varlibdockercontainers mountPath: /var/lib/docker/containers readOnly: true - name: config-volume mountPath: /etc/fluent/config.d nodeSelector: beta.kubernetes.io/fluentd-ds-ready: "true" terminationGracePeriodSeconds: 30 volumes: - name: varlog hostPath: path: /var/log - name: varlibdockercontainers hostPath: path: /var/lib/docker/containers - name: config-volume configMap: name: fluentd-es-config-v0.1.4

通过执行如下的命令部署Fluentd:

$ kubectl create -f {path}/fluentd-es-ds.yaml

2.3 安装部署Kibana

Kibana是一个开源的分析与可视化平台,被设计用于和Elasticsearch一起使用的。通过kibana可以搜索、查看和交互存放在Elasticsearch中的数据,利用各种不同的图表、表格和地图等,Kibana能够对数据进行分析与可视化。Kibana部署的YAML如下所示,通过环境变量ELASTICSEARCH_URL,指定所获取日志数据的Elasticsearch服务,此处为:http://elasticsearch-logging:9200,elasticsearch.cattle-logging是elasticsearch在Kubernetes中代理服务的名称。在Fluented配置文件中,有下面的一些关键指令:

- source指令确定输入源。

- match指令确定输出目标。

- filter指令确定事件处理管道。

- system指令设置系统范围的配置。

- label指令将输出和过滤器分组以进行内部路由

- @include指令包含其他文件。

apiVersion: apps/v1

kind: Deployment

metadata:

name: kibana-logging

namespace: kube-system

labels:

k8s-app: kibana-logging

kubernetes.io/cluster-service: "true"

addonmanager.kubernetes.io/mode: Reconcile

spec:

replicas: 1

selector:

matchLabels:

k8s-app: kibana-logging

template:

metadata:

labels:

k8s-app: kibana-logging

annotations:

seccomp.security.alpha.kubernetes.io/pod: 'docker/default'

spec:

containers:

- name: kibana-logging

image: docker.elastic.co/kibana/kibana-oss:6.2.4

resources:

# need more cpu upon initialization, therefore burstable class

limits:

cpu: 1000m

requests:

cpu: 100m

env:

- name: ELASTICSEARCH_URL

value: http://elasticsearch-logging:9200

ports:

- containerPort: 5601

name: ui

protocol: TCP

通过执行如下的命令部署Kibana的代理服务:

$ kubectl create -f {path}/kibana-deployment.yaml

下面Kibana的代理服务YAML配置文件,代理服务的类型为NodePort。

apiVersion: v1 kind: Service metadata: name: kibana-logging namespace: kube-system labels: k8s-app: kibana-logging kubernetes.io/cluster-service: "true" addonmanager.kubernetes.io/mode: Reconcile kubernetes.io/name: "Kibana" spec: type: NodePort ports: - port: 5601 protocol: TCP targetPort: ui selector: k8s-app: kibana-logging

通过执行如下的命令部署Kibana的代理服务:

$ kubectl create -f {path}/kibana-service.yaml

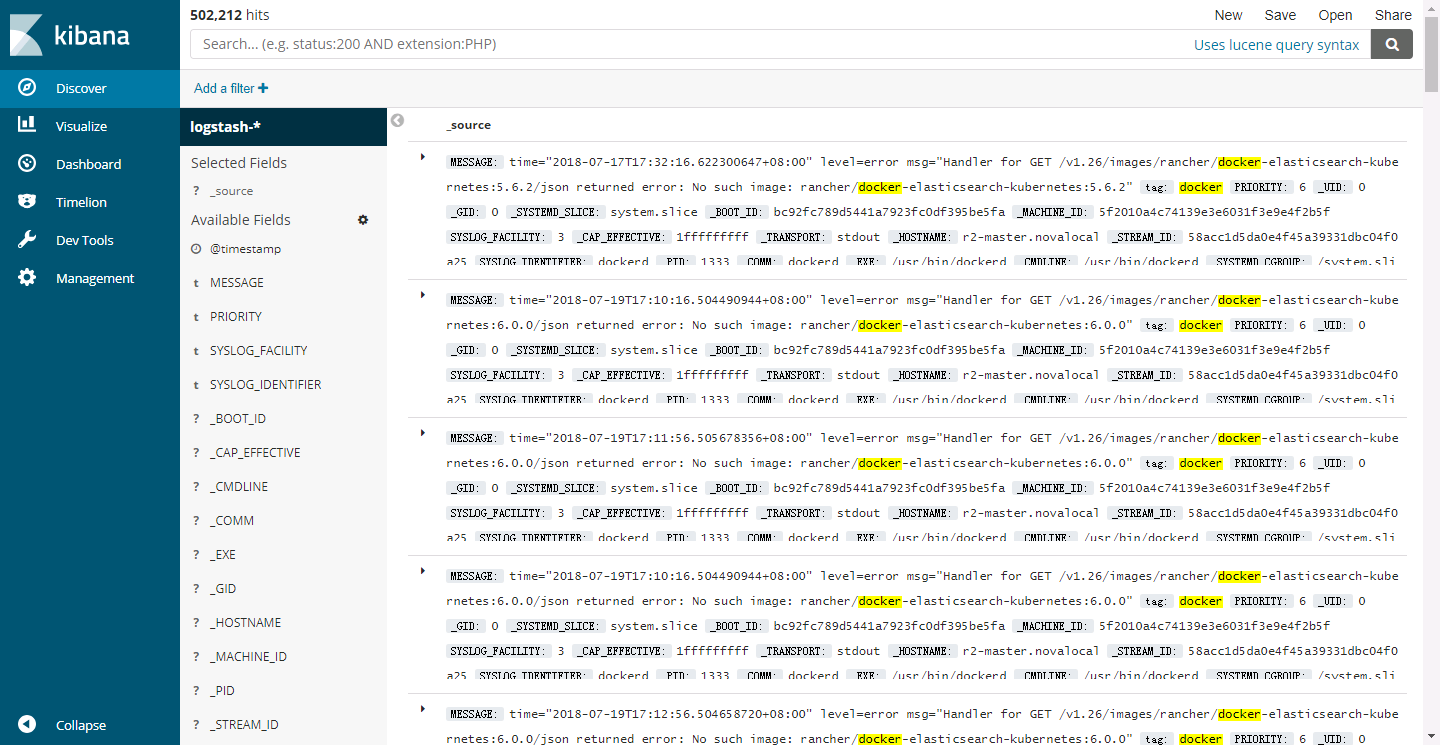

3、日志数据展示

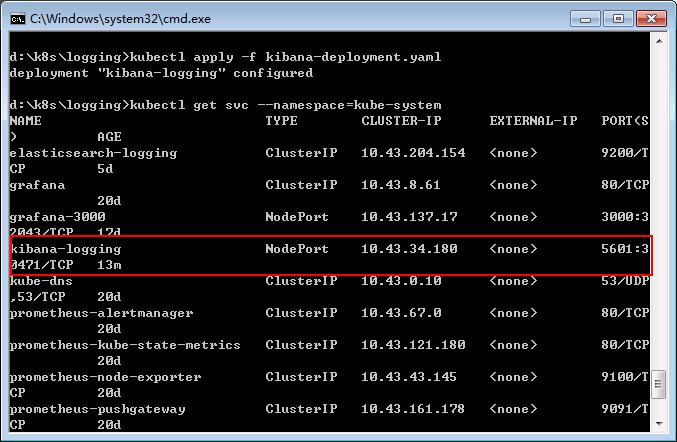

通过如下命令获取Kibana的对外暴露的端口:

$ kubectl get svc --namespace=kube-system

从输出的信息可以知道,kibana对外暴露的端口为30471,因此在Kubernetes集群外可以通过:http://{NodeIP}:30471 访问kibana。

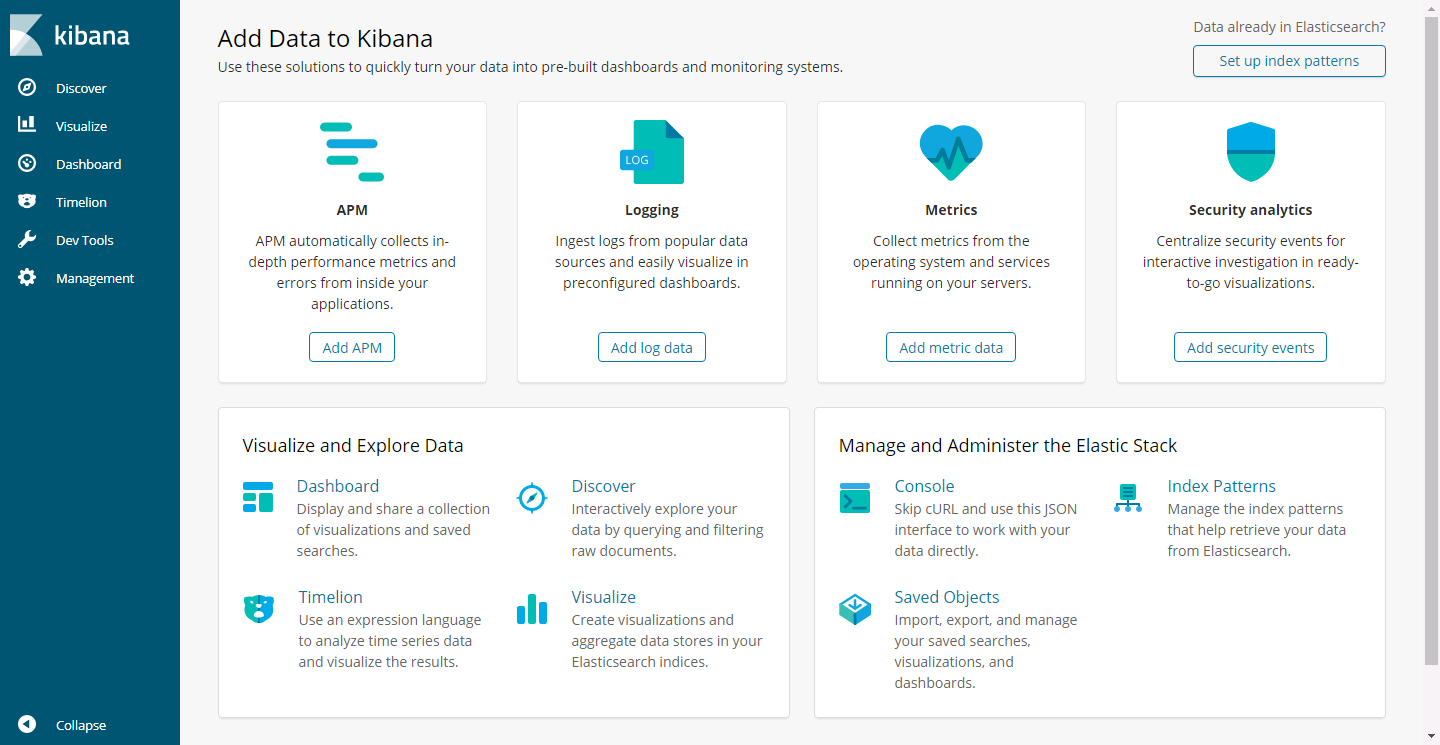

通过点击“Discover”,就能够实时看看从容器中获取到的日志信息:

参考资料

1.《Logging Architecture》地址:https://kubernetes.io/docs/concepts/cluster-administration/logging/

2.《Kubernetes Logging with Fluentd》地址:https://docs.fluentd.org/v0.12/articles/kubernetes-fluentd

3.《Quickstart Guide》地址:https://docs.fluentd.org/v0.12/articles/quickstart

4.《fluentd-elasticsearch》地址:https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch

5.《Elasticsearch》地址:https://www.elastic.co/products/elasticsearch

6.《What is Fluentd?》地址:https://www.fluentd.org/architecture

7.《Configuration File Syntax》地址:https://docs.fluentd.org/v1.0/articles/config-file

8.《A Practical Introduction to Elasticsearch》地址:https://www.elastic.co/blog/a-practical-introduction-to-elasticsearch

作者简介:

季向远,北京神舟航天软件技术有限公司产品经理。本文版权归原作者所有。

Kubernetes中文社区

Kubernetes中文社区

为什么我报这个错误:望指点一二,Error: failed to start container “fluentd-es”: Error response from daemon: linux mounts: Path /var/lib/docker/containers is mounted on /var/lib/docker/containers but it is not a shared or slave mount.

为什么我看不到日志,我kuber-service 改成了 default

[root@k8s-master ~]# kubectl logs elasticsearch-logging-1 -n kube-system

OpenJDK 64-Bit Server VM warning: Option UseConcMarkSweepGC was deprecated in version 9.0 and will likely be removed in a future release.

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,677+0000”, “level”: “INFO”, “component”: “o.e.e.NodeEnvironment”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “using [1] data paths, mounts [[/ (overlay)]], net usable_space [853.4gb], net total_space [872gb], types [overlay]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,678+0000”, “level”: “INFO”, “component”: “o.e.e.NodeEnvironment”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “heap size [1015.6mb], compressed ordinary object pointers [true]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,680+0000”, “level”: “INFO”, “component”: “o.e.n.Node”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “node name [elasticsearch-logging-1], node ID [OrCnz-_GRJuqgz91tw8QjQ], cluster name [docker-cluster]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,680+0000”, “level”: “INFO”, “component”: “o.e.n.Node”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “version[7.2.0], pid[1], build[default/docker/508c38a/2019-06-20T15:54:18.811730Z], OS[Linux/4.4.233-1.el7.elrepo.x86_64/amd64], JVM[Oracle Corporation/OpenJDK 64-Bit Server VM/12.0.1/12.0.1+12]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,680+0000”, “level”: “INFO”, “component”: “o.e.n.Node”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “JVM home [/usr/share/elasticsearch/jdk]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:38:56,681+0000”, “level”: “INFO”, “component”: “o.e.n.Node”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “JVM arguments [-Xms1g, -Xmx1g, -XX:+UseConcMarkSweepGC, -XX:CMSInitiatingOccupancyFraction=75, -XX:+UseCMSInitiatingOccupancyOnly, -Des.networkaddress.cache.ttl=60, -Des.networkaddress.cache.negative.ttl=10, -XX:+AlwaysPreTouch, -Xss1m, -Djava.awt.headless=true, -Dfile.encoding=UTF-8, -Djna.nosys=true, -XX:-OmitStackTraceInFastThrow, -Dio.netty.noUnsafe=true, -Dio.netty.noKeySetOptimization=true, -Dio.netty.recycler.maxCapacityPerThread=0, -Dlog4j.shutdownHookEnabled=false, -Dlog4j2.disable.jmx=true, -Djava.io.tmpdir=/tmp/elasticsearch-10012769287542693496, -XX:+HeapDumpOnOutOfMemoryError, -XX:HeapDumpPath=data, -XX:ErrorFile=logs/hs_err_pid%p.log, -Xlog:gc*,gc+age=trace,safepoint:file=logs/gc.log:utctime,pid,tags:filecount=32,filesize=64m, -Djava.locale.providers=COMPAT, -Des.cgroups.hierarchy.override=/, -Dio.netty.allocator.type=unpooled, -XX:MaxDirectMemorySize=536870912, -Des.path.home=/usr/share/elasticsearch, -Des.path.conf=/usr/share/elasticsearch/config, -Des.distribution.flavor=default, -Des.distribution.type=docker, -Des.bundled_jdk=true]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,652+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [aggs-matrix-stats]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,652+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [analysis-common]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,652+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [data-frame]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,652+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [ingest-common]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,652+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [ingest-geoip]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [ingest-user-agent]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [lang-expression]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [lang-mustache]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [lang-painless]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [mapper-extras]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [parent-join]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [percolator]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [rank-eval]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,653+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [reindex]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [repository-url]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [transport-netty4]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-ccr]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-core]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-deprecation]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-graph]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-ilm]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-logstash]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,654+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-ml]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-monitoring]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-rollup]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-security]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-sql]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “loaded module [x-pack-watcher]” }

{“type”: “server”, “timestamp”: “2020-08-27T10:39:01,655+0000”, “level”: “INFO”, “component”: “o.e.p.PluginsService”, “cluster.name”: “docker-cluster”, “node.name”: “elasticsearch-logging-1”, “message”: “no plugins loaded” }