| Introduction

- https://github.com/kubernetes/community/pull/1054

- https://github.com/kubernetes/community/pull/1140

- https://github.com/kubernetes/community/pull/1105

- Selected code from Kubernetes 1.9 and 1.10

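| Local Volumes

- Makes better use of local high-performance media (SSD, flash) to raise database throughput (QPS/TPS). This conclusion does not necessarily hold, as discussed later.

- A more self-contained operations model. More and more databases now implement multiple data replicas and data consistency through replication (for example MySQL Group Replication, MariaDB Galera Cluster, and Percona XtraDB Cluster), so DBAs can handle every problem themselves without depending on storage engineers or SAs.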

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-pv
    spec:
      capacity:
        storage: 10Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Delete
      storageClassName: local-storage
      local:
        path: /mnt/disks/ssd1

| Problems with the Original Scheduling Mechanism

    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: mysql-5.7
    spec:
      replicas: 1
      template:
        metadata:
          name: mysql-5.7
        spec:
          containers:
          - name: mysql
            resources:
              limits:
                cpu: 5300m
                memory: 5Gi
            volumeMounts:
            - mountPath: /var/lib/mysql
              name: data
      volumeClaimTemplates:
      - metadata:
          name: data
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi

    func (spc *realStatefulPodControl) CreateStatefulPod(set *apps.StatefulSet, pod *v1.Pod) error {
        // Create the Pod's PVCs prior to creating the Pod
        if err := spc.createPersistentVolumeClaims(set, pod); err != nil {
            spc.recordPodEvent("create", set, pod, err)
            return err
        }
        // If we created the PVCs attempt to create the Pod
        _, err := spc.client.CoreV1().Pods(set.Namespace).Create(pod)
        // sink already exists errors
        if apierrors.IsAlreadyExists(err) {
            return err
        }
        spc.recordPodEvent("create", set, pod, err)
        return err
    }

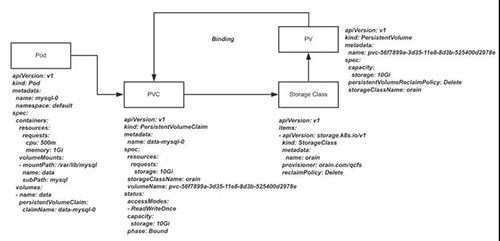

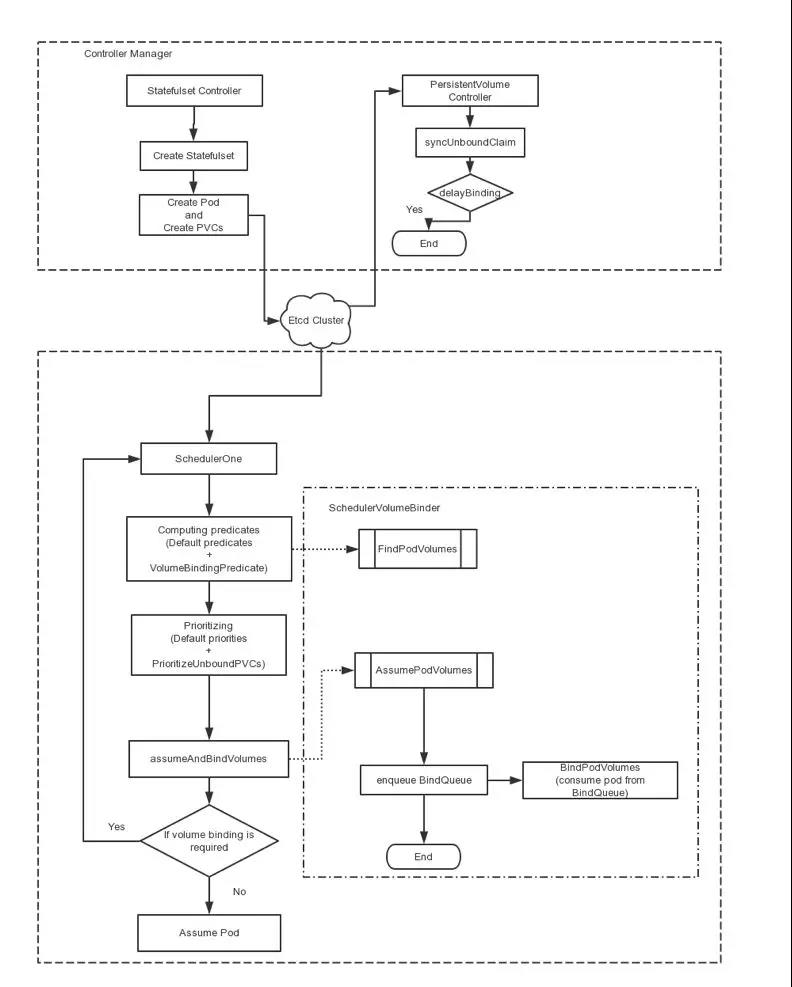

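- PVC binding happens before Pod scheduling. The PersistentVolume Controller does not wait for the Scheduler's decision, and in a StatefulSet the PVC is created before the Pod, so the PVC/PV binding may complete before the Pod is scheduled.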
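To make the ordering problem concrete, here is a minimal sketch in Go. The types `PV` and `Node` and the functions `bindFirstAvailable`, `pickNodeByCPU`, and `conflict` are hypothetical simplifications for illustration, not Kubernetes APIs. It only shows how two components that decide independently can end up disagreeing about where a Pod with a local volume should run.

```go
package main

import "fmt"

// PV and Node are hypothetical, simplified stand-ins for illustration;
// they are not the real Kubernetes API objects.
type PV struct {
	Name string
	Node string // the node that physically hosts this local volume
}

type Node struct {
	Name    string
	FreeCPU int // millicores
}

// bindFirstAvailable mimics a controller that binds a claim to the first
// available PV without knowing where the Pod will eventually run.
func bindFirstAvailable(pvs []PV) (PV, bool) {
	if len(pvs) == 0 {
		return PV{}, false
	}
	return pvs[0], true
}

// pickNodeByCPU mimics a scheduler that looks only at CPU and ignores
// where the volume lives.
func pickNodeByCPU(nodes []Node, requestCPU int) (Node, bool) {
	for _, n := range nodes {
		if n.FreeCPU >= requestCPU {
			return n, true
		}
	}
	return Node{}, false
}

// conflict reports whether the two independent decisions disagree.
func conflict(pv PV, node Node) bool {
	return pv.Node != node.Name
}

func main() {
	pvs := []PV{{Name: "local-pv", Node: "node1"}}
	nodes := []Node{
		{Name: "node1", FreeCPU: 1000},
		{Name: "node2", FreeCPU: 8000},
	}

	pv, _ := bindFirstAvailable(pvs)        // binding happens first
	node, _ := pickNodeByCPU(nodes, 5300)   // scheduling happens later
	fmt.Printf("PV lives on %s, Pod scheduled to %s, conflict=%v\n",
		pv.Node, node.Name, conflict(pv, node))
}
```

In this toy setup the volume is bound on node1 while only node2 has enough CPU, so the Pod cannot run next to its data.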

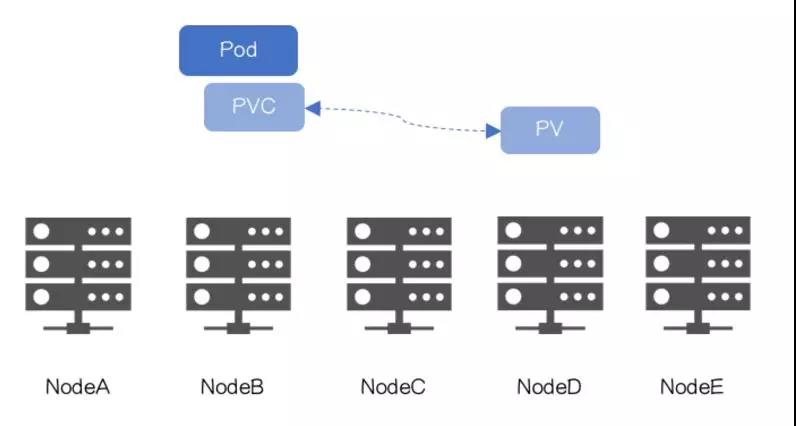

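- The Scheduler is not aware of a volume's "location". It only considers storage capacity, access modes, storage type, and limits imposed by third-party cloud providers (for example the limits on the number of disks on AWS, GCE, and Azure).

- Try to get the two bosses to talk to each other

- Take sides: pick one boss and follow only that boss's instructions

- Resign

- How to mark a Topology-Aware Volume

- How to keep the PersistentVolume Controller out of the binding without affecting the existing flow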

| Feature:VolumeScheduling

    "volume.alpha.kubernetes.io/node-affinity": '{
      "requiredDuringSchedulingIgnoredDuringExecution": {
        "nodeSelectorTerms": [
          { "matchExpressions": [
            { "key": "kubernetes.io/hostname",
              "operator": "In",
              "values": ["Node1"]
            }
          ]}
        ]}
    }'
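As a rough illustration of how such an annotation can be evaluated, the following Go sketch parses the JSON value and checks it against a node's labels. The type and function names (`requirement`, `selectorTerm`, `nodeAffinity`, `nodeMatches`) are hypothetical, and only the `In` operator is handled. It assumes the usual node selector semantics: terms are ORed, the expressions inside one term are ANDed, and an empty term matches nothing.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// requirement mirrors one entry of "matchExpressions" in the annotation.
type requirement struct {
	Key      string   `json:"key"`
	Operator string   `json:"operator"`
	Values   []string `json:"values"`
}

// selectorTerm mirrors one entry of "nodeSelectorTerms".
type selectorTerm struct {
	MatchExpressions []requirement `json:"matchExpressions"`
}

// nodeAffinity mirrors the JSON stored in the annotation value.
type nodeAffinity struct {
	Required struct {
		NodeSelectorTerms []selectorTerm `json:"nodeSelectorTerms"`
	} `json:"requiredDuringSchedulingIgnoredDuringExecution"`
}

// nodeMatches reports whether a node with the given labels satisfies the
// affinity. Terms are ORed; expressions within a term are ANDed.
func nodeMatches(annotation string, labels map[string]string) (bool, error) {
	var aff nodeAffinity
	if err := json.Unmarshal([]byte(annotation), &aff); err != nil {
		return false, err
	}
	for _, term := range aff.Required.NodeSelectorTerms {
		if termMatches(term, labels) {
			return true, nil
		}
	}
	return false, nil
}

// termMatches handles only the "In" operator (a simplification).
func termMatches(term selectorTerm, labels map[string]string) bool {
	if len(term.MatchExpressions) == 0 {
		return false // an empty term matches no nodes
	}
	for _, req := range term.MatchExpressions {
		value, ok := labels[req.Key]
		if !ok || req.Operator != "In" || !contains(req.Values, value) {
			return false
		}
	}
	return true
}

func contains(values []string, v string) bool {
	for _, candidate := range values {
		if candidate == v {
			return true
		}
	}
	return false
}

// exampleAnnotation is the annotation value from the text above.
const exampleAnnotation = `{
  "requiredDuringSchedulingIgnoredDuringExecution": {
    "nodeSelectorTerms": [
      { "matchExpressions": [
        { "key": "kubernetes.io/hostname", "operator": "In", "values": ["Node1"] }
      ]}
    ]}
}`

func main() {
	for _, host := range []string{"Node1", "Node2"} {
		labels := map[string]string{"kubernetes.io/hostname": host}
		ok, err := nodeMatches(exampleAnnotation, labels)
		fmt.Println(host, ok, err)
	}
}
```

With this annotation only a node labeled `kubernetes.io/hostname=Node1` matches, which is what pins the volume to one host.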

- Create a StorageClass "X" (no provisioner needed) and set StorageClass.VolumeBindingMode = VolumeBindingWaitForFirstConsumer

- Set PVC.StorageClass to X

    return *class.VolumeBindingMode == storage.VolumeBindingWaitForFirstConsumer

    if claim.Spec.VolumeName == "" {
        // User did not care which PV they get.
        delayBinding, err := ctrl.shouldDelayBinding(claim)
        // ….
        switch {
        case delayBinding:
            // do nothing
        // ….
        }
    }
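The decision in the excerpt above can be sketched as a standalone Go program. Everything here is a simplified assumption: `StorageClass`, `Claim`, `shouldDelayBinding`, and `syncClaim` are illustrative stand-ins and do not reproduce the controller's real types or signatures. Only the two mode values, `Immediate` and `WaitForFirstConsumer`, are taken from Kubernetes.

```go
package main

import "fmt"

// VolumeBindingMode uses the two mode strings defined by Kubernetes.
type VolumeBindingMode string

const (
	BindImmediate            VolumeBindingMode = "Immediate"
	BindWaitForFirstConsumer VolumeBindingMode = "WaitForFirstConsumer"
)

// StorageClass and Claim are hypothetical, simplified stand-ins.
type StorageClass struct {
	Name              string
	VolumeBindingMode *VolumeBindingMode // nil means "not set"
}

type Claim struct {
	Name             string
	StorageClassName string
	VolumeName       string // non-empty when the user asked for a specific PV
}

// shouldDelayBinding returns true only when the claim's class exists and
// explicitly asks to wait for the first consumer.
func shouldDelayBinding(claim Claim, classes map[string]StorageClass) bool {
	class, ok := classes[claim.StorageClassName]
	if !ok || class.VolumeBindingMode == nil {
		return false
	}
	return *class.VolumeBindingMode == BindWaitForFirstConsumer
}

// syncClaim describes what a controller following this rule would do.
func syncClaim(claim Claim, classes map[string]StorageClass) string {
	if claim.VolumeName != "" {
		return "bind to requested volume"
	}
	if shouldDelayBinding(claim, classes) {
		return "do nothing, leave binding to the scheduler"
	}
	return "bind immediately"
}

func main() {
	wait := BindWaitForFirstConsumer
	classes := map[string]StorageClass{
		"local-storage": {Name: "local-storage", VolumeBindingMode: &wait},
		"standard":      {Name: "standard"},
	}
	for _, c := range []Claim{
		{Name: "data-mysql-0", StorageClassName: "local-storage"},
		{Name: "logs", StorageClassName: "standard"},
	} {
		fmt.Printf("%s: %s\n", c.Name, syncClaim(c, classes))
	}
}
```

The point of the design is that existing claims keep their behavior: only classes that opt in to `WaitForFirstConsumer` take the delayed path.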

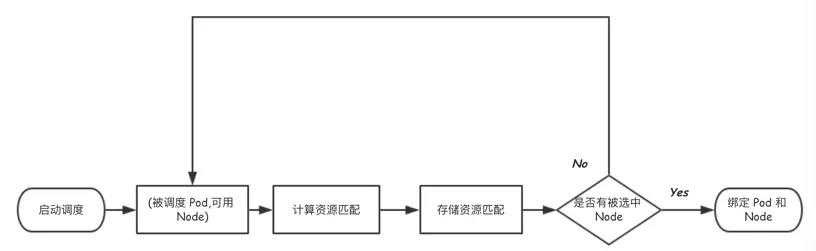

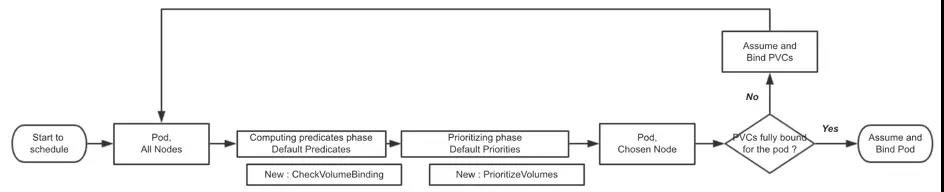

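- Run the existing Predicate functions

- Run the newly added Predicate function CheckVolumeBinding to verify that the candidate Node satisfies the PV's physical topology (the main logic lives in FindPodVolumes):

  - Bound PVCs: the NodeAffinity of the corresponding PV must match the candidate Node; otherwise the Node is filtered out

  - Unbound PVCs: check whether the PVC requires delayed binding. If so, iterate over the unbound PVs and check whether each one's NodeAffinity matches the candidate Node. On a match, record the PVC-to-PV mapping in the bindingInfo cache so that the final binding can be made once the Node is chosen.

  - If neither of the above applies: check whether the PVC's StorageClass can dynamically provision a Topology-Aware Volume (also called topology-aware dynamic provisioning)

- Run the existing Priority functions

- Run the newly added Priority function PrioritizeVolumes. The closer a volume's capacity is to the request, the better, which avoids wasting local storage.

- The Scheduler selects a Node

- The Scheduler issues API updates to complete the final PVC/PV binding (an asynchronous operation whose timing is nondeterministic and which may fail)

- It reads the PVC-to-PV bindings for the chosen Node from the bindingInfo cache and performs the actual binding through the API

- If the StorageClass needs to provision dynamically, the selected Node is assigned to StorageClass.topologyKey as the topology constraint for the volume that the StorageClass creates. The implementation of this feature is still under discussion.

- Bind the scheduled Pod to the Node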
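The flow above can be approximated with a small Go sketch that covers only the volume-related parts: filter nodes that have a usable unbound PV, prefer the node whose PV wastes the least capacity, and return the tentative PVC-to-PV pairing. The types `PV` and `Claim` and the functions `findMatchingPV` and `schedule` are hypothetical. The real scheduler combines many more predicates and priorities and performs the binding asynchronously through the API.

```go
package main

import "fmt"

// PV and Claim are hypothetical, simplified stand-ins for illustration.
type PV struct {
	Name     string
	Node     string // node the local volume is pinned to
	Capacity int64  // GiB
	Bound    bool
}

type Claim struct {
	Name    string
	Request int64 // GiB
}

// findMatchingPV plays the role of the volume predicate for one node: it
// returns the smallest unbound PV on that node that satisfies the claim.
func findMatchingPV(pvs []PV, node string, claim Claim) (PV, bool) {
	var best PV
	found := false
	for _, pv := range pvs {
		if pv.Bound || pv.Node != node || pv.Capacity < claim.Request {
			continue
		}
		if !found || pv.Capacity < best.Capacity {
			best, found = pv, true
		}
	}
	return best, found
}

// schedule filters nodes with the predicate, then prefers the node whose
// candidate PV leaves the least unused capacity. The returned PV is the
// tentative binding that would be applied after the node is chosen.
func schedule(nodes []string, pvs []PV, claim Claim) (string, PV, bool) {
	var chosenNode string
	var chosenPV PV
	found := false
	for _, node := range nodes {
		pv, ok := findMatchingPV(pvs, node, claim)
		if !ok {
			continue // predicate failed: no usable local PV on this node
		}
		if !found || pv.Capacity-claim.Request < chosenPV.Capacity-claim.Request {
			chosenNode, chosenPV, found = node, pv, true
		}
	}
	return chosenNode, chosenPV, found
}

func main() {
	nodes := []string{"node1", "node2", "node3"}
	pvs := []PV{
		{Name: "pv-a", Node: "node1", Capacity: 100},
		{Name: "pv-b", Node: "node2", Capacity: 10},
		{Name: "pv-c", Node: "node2", Capacity: 20},
	}
	claim := Claim{Name: "data-mysql-0", Request: 10}

	node, pv, ok := schedule(nodes, pvs, claim)
	fmt.Println(node, pv.Name, ok)
}
```

Here node3 is filtered out because it has no PV at all, and node2 wins over node1 because its 10 GiB volume fits the 10 GiB request exactly.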

    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: local-pv
      annotations:
        "volume.alpha.kubernetes.io/node-affinity": '{
          "requiredDuringSchedulingIgnoredDuringExecution": {
            "nodeSelectorTerms": [
              { "matchExpressions": [
                { "key": "kubernetes.io/hostname",
                  "operator": "In",
                  "values": ["k8s-node1-product"]
                }
              ]}
            ]}
        }'
    spec:
      capacity:
        storage: 10Gi
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Delete
      storageClassName: local-storage
      local:
        path: /mnt/disks/ssd1

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: local-storage
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer

    apiVersion: apps/v1beta1
    kind: StatefulSet
    metadata:
      name: mysql-5.7
    spec:
      replicas: 1
      template:
        metadata:
          name: mysql-5.7
        spec:
          containers:
          - name: mysql
            resources:
              limits:
                cpu: 5300m
                memory: 5Gi
            volumeMounts:
            - mountPath: /var/lib/mysql
              name: data
      volumeClaimTemplates:
      - metadata:
          annotations:
            volume.beta.kubernetes.io/storage-class: local-storage
          name: data
        spec:
          accessModes:
          - ReadWriteOnce
          resources:
            requests:
              storage: 10Gi

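| Limitations of "PersistentLocalVolumes" and "VolumeScheduling"

- Lower resource utilization. Once a node's local storage is used up, the node can no longer serve new workloads, no matter how much CPU and memory remain.

- Local storage must be planned carefully, for example the number and capacity of volumes on each node, much like LUNs had to be planned in advance with traditional storage. This is hard to put into practice in a large-scale environment.

- After a Node becomes unavailable, wait for the timeout threshold to expire to determine whether the Node can recover

- If the Node is confirmed unrecoverable, delete the PVC; unbinding the PVC from the PV releases the Pod from the Node

- The Scheduler places the Pod on another available Node, and the PVC is rebound to a PV on that Node

- The Operator finds the latest MySQL backup and copies it to the new PV

- The MySQL cluster restores the instance's data through incremental synchronization

- Incremental synchronization switches to real-time synchronization, and the MySQL cluster is recovered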
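The recovery steps above form an ordered sequence in which each step should run only if the previous one succeeded. A minimal Go sketch of that control flow follows; the `step` type, `runRecovery`, and the stub functions are hypothetical placeholders, and a real Operator would call the Kubernetes API and MySQL tooling where the stubs simply return nil.

```go
package main

import (
	"errors"
	"fmt"
)

// errStepFailed is a placeholder error used to simulate a failing step.
var errStepFailed = errors.New("step failed")

// step is a hypothetical unit of the recovery workflow.
type step struct {
	name string
	run  func() error
}

// runRecovery executes the steps in order and stops at the first failure.
// It returns the names of the steps that completed.
func runRecovery(steps []step) ([]string, error) {
	var done []string
	for _, s := range steps {
		if err := s.run(); err != nil {
			return done, fmt.Errorf("step %q failed: %w", s.name, err)
		}
		done = append(done, s.name)
	}
	return done, nil
}

// succeed is a stub standing in for real work (API calls, data copy).
func succeed() error { return nil }

func main() {
	steps := []step{
		{name: "wait for node timeout", run: succeed},
		{name: "delete PVC to release the Pod from the node", run: succeed},
		{name: "reschedule Pod and rebind PVC", run: succeed},
		{name: "copy latest backup to new PV", run: succeed},
		{name: "incremental sync", run: succeed},
		{name: "switch to real-time sync", run: succeed},
	}
	done, err := runRecovery(steps)
	fmt.Println(len(done), "steps completed, err =", err)
}
```

Stopping at the first failure matters here: copying a backup before the PVC has been rebound, for instance, would write to a volume the Pod will never see.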

Kubernetes中文社区


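Nice write-up, but it feels like introducing local PVCs brings more problems than it solves. Performance versus high availability is a matter of opinion; if money is no object, shared storage is still the better choice.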